Michigan AI celebrates second annual symposium

The goal of the symposium is to facilitate conversations between AI practitioners from Michigan and beyond.

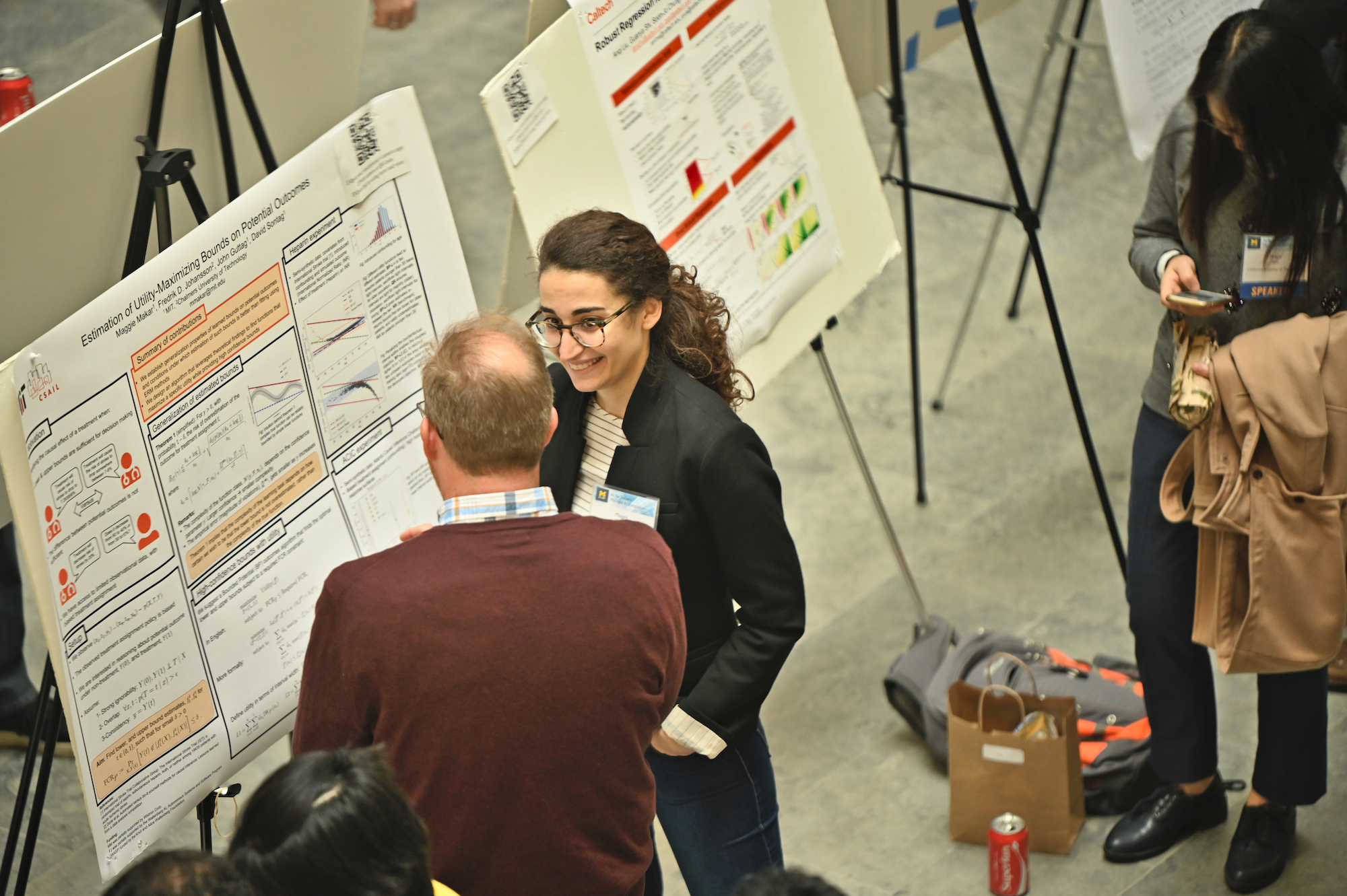

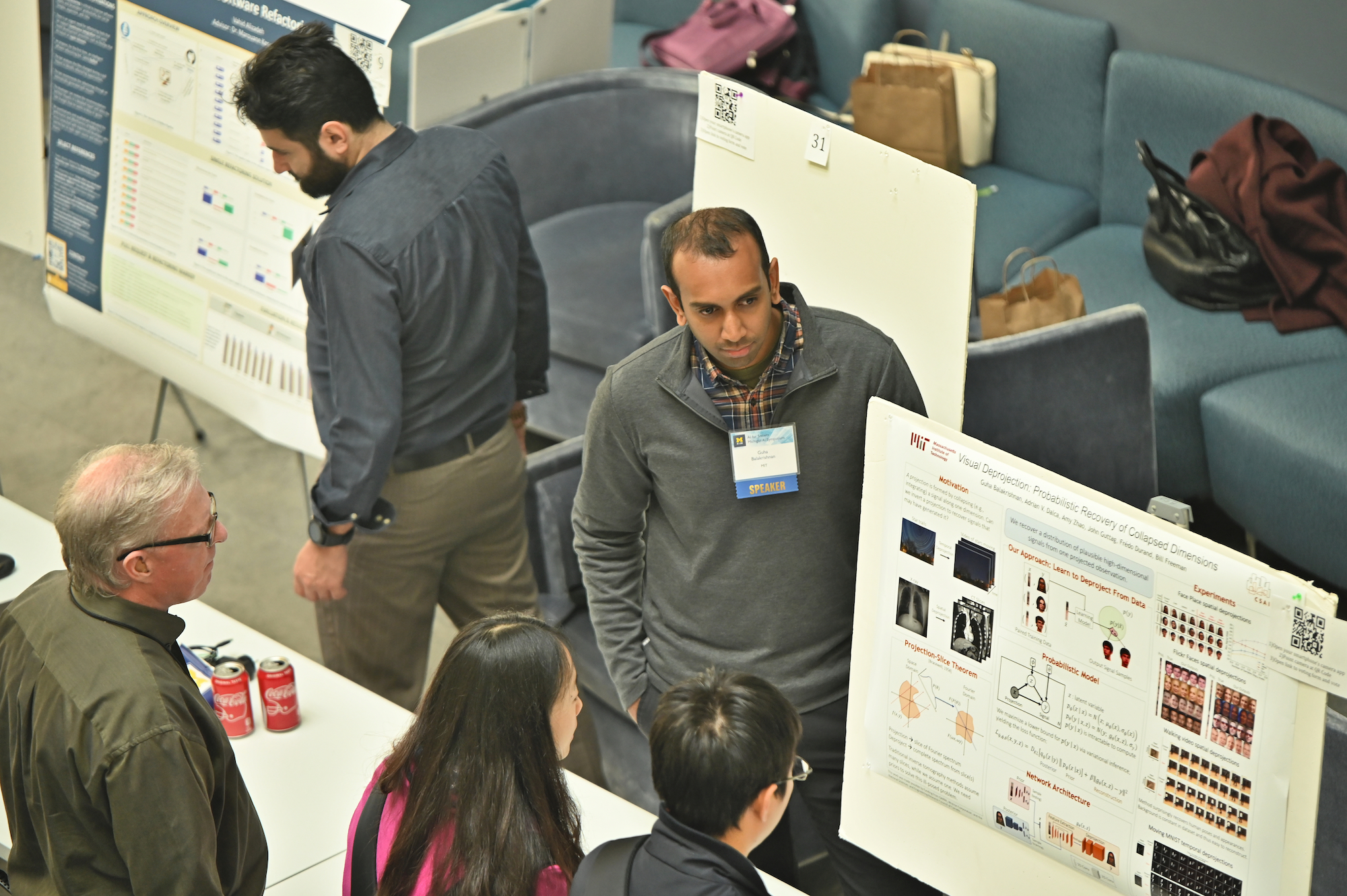

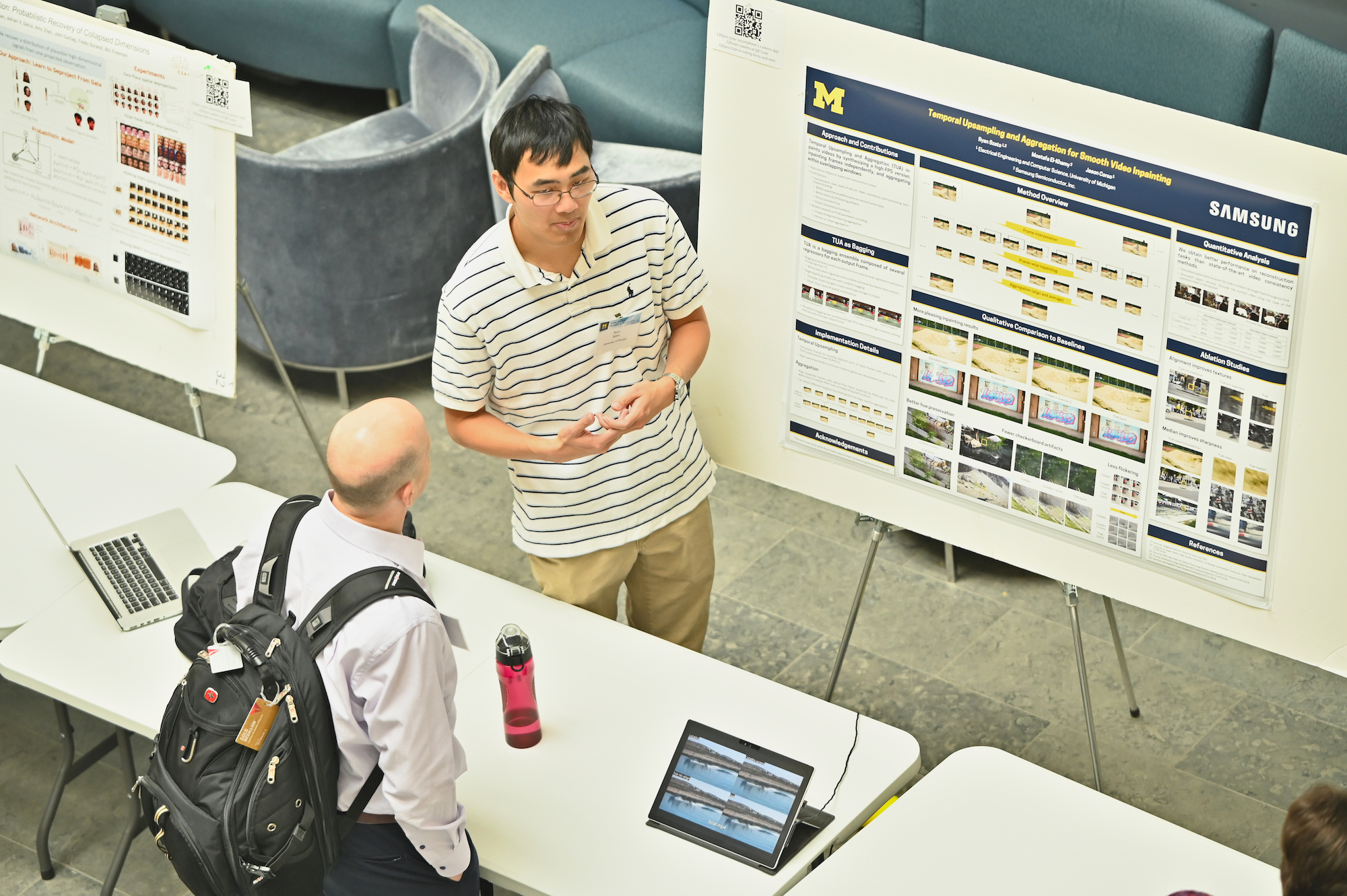

Over 300 Michiganders & friends from academia, industry, and the local community attended this year’s AI symposium, “AI for Society.” The event was held on Saturday, October 19, and welcomed its participants with research talks, posters, demos, and plenty of networking opportunities.

“Our goal with our annual Michigan AI Symposium is to facilitate conversations between researchers in AI at the University of Michigan and the broader community of AI practitioners from Michigan and beyond,” says Prof. Rada Mihalcea, general chair of the Michigan AI Symposium and director of Michigan AI. “We have succeeded in this goal: there was a lot of positive energy surrounding the symposium and all the amazing AI research that was presented, and also a lot of excitement for the future of AI here at Michigan.”

The program featured two main sessions of faculty and invited talks, chaired by Profs. John Laird and Jenna Wiens, and a poster and demo session. A keynote talk was delivered by Prof. Doina Precup from McGill University, who also heads the Montreal office of DeepMind. The symposium concluded with a panel on AI & Ethics moderated by Prof. Ben Kuipers, with Profs. Ella Atkins, HV Jagadish, and Michael Wellman on the panel.

At the end of the day, PhD student Preeti Ramaraj (who chaired the session together with PhD student Matthew Perez) presented awards to four outstanding demo and poster session presentations:

Best poster

Area: Speech and Language

“HEIDL: Learning Linguistic Expressions with Deep Learning and Human-in-the-Loop”

Yiwei Yang (U-M, ME), Eser Kandogan (IBM Research), Yunyao Li (IBM Research), Walter S. Lasecki (U-M, CSE), Prithviraj Sen (IBM Research)

Best demo

Area: AI + X

“Pulse Wave Diagnosis”

Ovidiu Calin (EMU)

Most impactful to society

Area: AI + X

“Racial Discrimination in Policing: 5 Problems in 5 Minutes”

Emma Pierson (Stanford U.)

Most impactful to AI

Area: Core AI

“From Ontologies to Learning-Based Programs”

Quan Guo (MSU), Andrzej Uszok (IHMC), Yue Zhang (MSU), Parisa Kordjamshidi (MSU)

This event was possible thanks to the hard work of over 20 volunteers, all students at Michigan AI (whom you can spot wearing the yellow AI T-shirts in the pictures), and the event coordinator, Aurelia Bunescu.

Below are titles and abstracts of the talks delivered by keynote speaker Doina Precup, Michigan AI faculty, and the invited guest speakers. These talks will soon be available to watch on the Michigan AI YouTube channel. Follow the Michigan AI Lab on Twitter and Facebook for updates. The list of posters and demos and more information about the symposium, including its sponsors, are available on the symposium webpage.

Keynote Address

Doina Precup

“Building Knowledge For AI Agents With Reinforcement Learning”

Abstract: Reinforcement learning allows autonomous agents to learn how to act in a stochastic, unknown environment, with which they can interact. Deep reinforcement learning, in particular, has achieved great success in well-defined application domains, such as Go or chess, in which an agent has to learn how to act and there is a clear success criterion. In this talk, I will focus on the potential role of reinforcement learning as a tool for building knowledge representations in AI agents whose goal is to perform continual learning. I will examine a key concept in reinforcement learning, the value function, and discuss its generalization to support various forms of predictive knowledge. I will also discuss the role of temporally extended actions, and their associated predictive models, in learning procedural knowledge. Finally, I will discuss the challenge of how to evaluate reinforcement learning agents whose goal is not just to control their environment, but also to build knowledge about their world.

AI Faculty Talks

Ella Atkins

“AI for Improved In-Flight Situational Awareness and Safety”

Abstract: Traditional sensor data can be augmented with new data sources such as roadmaps and geographical information system (GIS) Lidar/video to offer emerging unmanned aircraft systems (UAS) and urban air mobility (UAM) a new level of situational awareness. This presentation will summarize my group’s research to identify, process, and utilize GIS, map, and other real-time data sources during nominal and emergency flight planning. Specific efforts have utilized machine learning to automatically map flat rooftops as urban emergency landing sites, incorporate cell phone data into an occupancy map for risk-aware flight planning, and extend airspace geofencing into a framework capable of managing all traffic types in complex airspace and land use environments.

David Fouhey

“Recovering a Functional and Three Dimensional Understanding of Images”

Abstract: What does it mean to understand an image? One common answer in computer vision has been that understanding means naming things: this part of the image corresponds to a refrigerator and that to a person, for instance. While important, the ability to name is not enough: humans can effortlessly reason about the rich 3D world that images depict and how this world functions and can be interacted with. For example, just looking at an image, we know what surfaces we could put a cup on, what would happen if we tugged on all the handles in the image, and what parts of the image could be picked up and moved. A computer, on the other hand, understands none of this. My research aims to address this by giving computers the ability to understand these 3D and functional (or interactive) properties. In this talk, I’ll highlight some of my efforts towards building this understanding in terms of modeling the 3D world and understanding how humans interact with it.

Joyce Chai

“Robot Learning through Language Communication”

Abstract: Language communication plays an important role in human learning and knowledge acquisition. With the emergence of a new generation of cognitive robots, empowering these robots to learn directly from human partners becomes increasingly important. In this talk, I will give a brief introduction to some ongoing work in my lab on communicative learning, where humans can teach robots new environments and new tasks through natural language communication and action demonstration.

Justin Johnson

“From Recognition to Reasoning”

Abstract: In recent years deep learning systems have become proficient at recognition problems: for example we can build systems that can recognize objects in images with high accuracy. But recognition is only the first step toward intelligent behavior. I am interested in solving problems that move from recognition to reasoning: systems should be able not only to recognize, but also to perform higher-level tasks based on what they perceive. I will showcase three projects that aim to jointly recognize and reason using end-to-end deep learning systems: predicting 3D shapes of objects from a single image, answering complex natural-language questions about images, and learning to solve interactive physics puzzles.

Edwin Olson

“AI and robots in the real world”

Abstract: Building AI systems can be slow work: it takes time to do the development, curate data sets, and train and test the systems. After iterating through this process, we can end up with a system that works quite well under the specific conditions for which the system was intended. But the real world can spring surprise after surprise at our systems. These “edge cases” are often brushed aside as evidence that we just need more data (or a slightly fancier model), which then takes yet more time to develop. How can we develop AI systems whose capabilities are fundamentally more modular and extensible, and that are better able to handle the surprises of the real world?

In this talk, I’ll describe our approach to building systems that must handle whatever the real world throws at them: an approach based around real-time simulation to decide between multiple competing AI strategies. A significant advantage of our approach is that the system is modular and extensible, so new capabilities can be added with a relatively modest amount of development time. I’ll describe the basic approach and some applications to on-road autonomous vehicles and teams of robots that play tag.

Invited Guest Speakers

Emma Pierson

AI + X

“Racial discrimination in policing: 5 problems in 5 minutes”

Abstract: Based on our work compiling and analyzing a dataset of more than 100 million police traffic stops, I discuss some of the challenges in statistically assessing discrimination in policing.

Angie Liu

Machine Learning

“Machine Learning for the Real World: Provably Robust Extrapolation”

Abstract: The unprecedented prediction accuracy of modern machine learning beckons for its application in a wide range of real-world applications, including autonomous robots, medical decision making, scientific experiment design, and many others. A key challenge in such real-world applications is that the test cases are not well represented by the pre-collected training data. To properly leverage learning in such domains, especially safety-critical ones, we must go beyond the conventional learning paradigm of maximizing average prediction accuracy with generalization guarantees that rely on strong distributional relationships between training and test examples.

In this talk, I will describe a robust learning framework that offers rigorous extrapolation guarantees under data distribution shift. This framework yields appropriately conservative yet still accurate predictions to guide real-world decision-making and is easily integrated with modern deep learning. I will showcase the practicality of this framework in an application on agile robotic control. I will conclude with a survey of other applications as well as directions for future work.

Katharina Kann

Speech & Language

“Low-Resource Languages: A Challenge for Natural Language Processing”

Abstract: Low-resource languages constitute a challenge for state-of-the-art natural language processing (NLP) systems for a variety of reasons. First of all, while most research in NLP focuses on English, many low-resource languages are typologically different from English, such that systems are often not trivially applicable, even with the help of translation. Another problem is that, as deep learning becomes increasingly popular in NLP research, many recently developed computational models require huge amounts of training data.

In this talk, I will discuss an approach for tackling the data sparsity problem that low-resource languages face: transductive auxiliary task self-training, a combination of self-training and multi-task training.

Sarah Brown

AI + X

“Wiggum: Simpson’s Paradox Inspired Fairness Forensics”

Abstract: Both technical limitations and social critiques of current approaches to fair machine learning motivate the need for more collaborative, human-driven approaches. We aim to empower human experts to conduct more critical exploratory analyses and support informal audits.

I will present Wiggum, a data exploration package with a visual analytics interface for Simpson’s-paradox-inspired fairness forensics. Wiggum detects and ranks multiple forms of Simpson’s paradox and related relaxations.
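For readers unfamiliar with Simpson’s paradox, the following is a minimal sketch of the basic reversal: a comparison that points one way within every subgroup but the opposite way once the subgroups are pooled. It does not use Wiggum or reflect its API; the function names and the counts (the widely cited kidney-stone treatment figures) are assumptions chosen purely for illustration.

```python
from collections import defaultdict

# Illustrative counts only (the classic kidney-stone example), not symposium data.
# Each record: (subgroup, treatment, successes, trials)
RECORDS = [
    ("small", "A", 81, 87),
    ("small", "B", 234, 270),
    ("large", "A", 192, 263),
    ("large", "B", 55, 80),
]


def success_rates(records):
    """Return (overall, by_group) success rates keyed by treatment."""
    totals = defaultdict(lambda: [0, 0])  # treatment -> [successes, trials]
    by_group = defaultdict(dict)          # subgroup -> {treatment: rate}
    for group, treatment, successes, trials in records:
        totals[treatment][0] += successes
        totals[treatment][1] += trials
        by_group[group][treatment] = successes / trials
    overall = {t: s / n for t, (s, n) in totals.items()}
    return overall, dict(by_group)


def shows_reversal(records, a="A", b="B"):
    """True if every subgroup ranks a vs. b opposite to the pooled data."""
    overall, by_group = success_rates(records)
    pooled_a_wins = overall[a] > overall[b]
    group_a_wins = [rates[a] > rates[b] for rates in by_group.values()]
    return all(wins != pooled_a_wins for wins in group_a_wins)


if __name__ == "__main__":
    overall, by_group = success_rates(RECORDS)
    print("pooled:", overall)
    print("by subgroup:", by_group)
    print("reversal:", shows_reversal(RECORDS))
```

Here treatment A has the higher success rate in both subgroups yet the lower rate overall, because the two treatments were applied to the subgroups in very different proportions. A fairness audit would look for the same pattern with a protected attribute in place of the subgroup variable.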

Guha Balakrishnan

Computer Vision

“Visual Deprojection: Probabilistic Recovery of Collapsed Image Dimensions”

Abstract: We introduce visual deprojection: the task of recovering an image or video that has been collapsed (e.g., integrated) along a dimension. Projections arise in various contexts, such as long-exposure photography, non-line-of-sight imaging, and X-rays. We first propose a probabilistic model capturing the ambiguity of this highly ill-posed task. We then present a variational inference strategy using convolutional neural networks as functional approximators. Sampling from the network at test time yields plausible candidates from the distribution of original signals that are consistent with a given input projection. We first demonstrate that the method can recover many details of human gait videos and face images from their spatial projections, a surprising finding with implications for indirect imaging, tomography and understanding natural image structure. We then show that the method can recover videos of moving digits from dramatically motion-blurred images obtained via temporal projection.

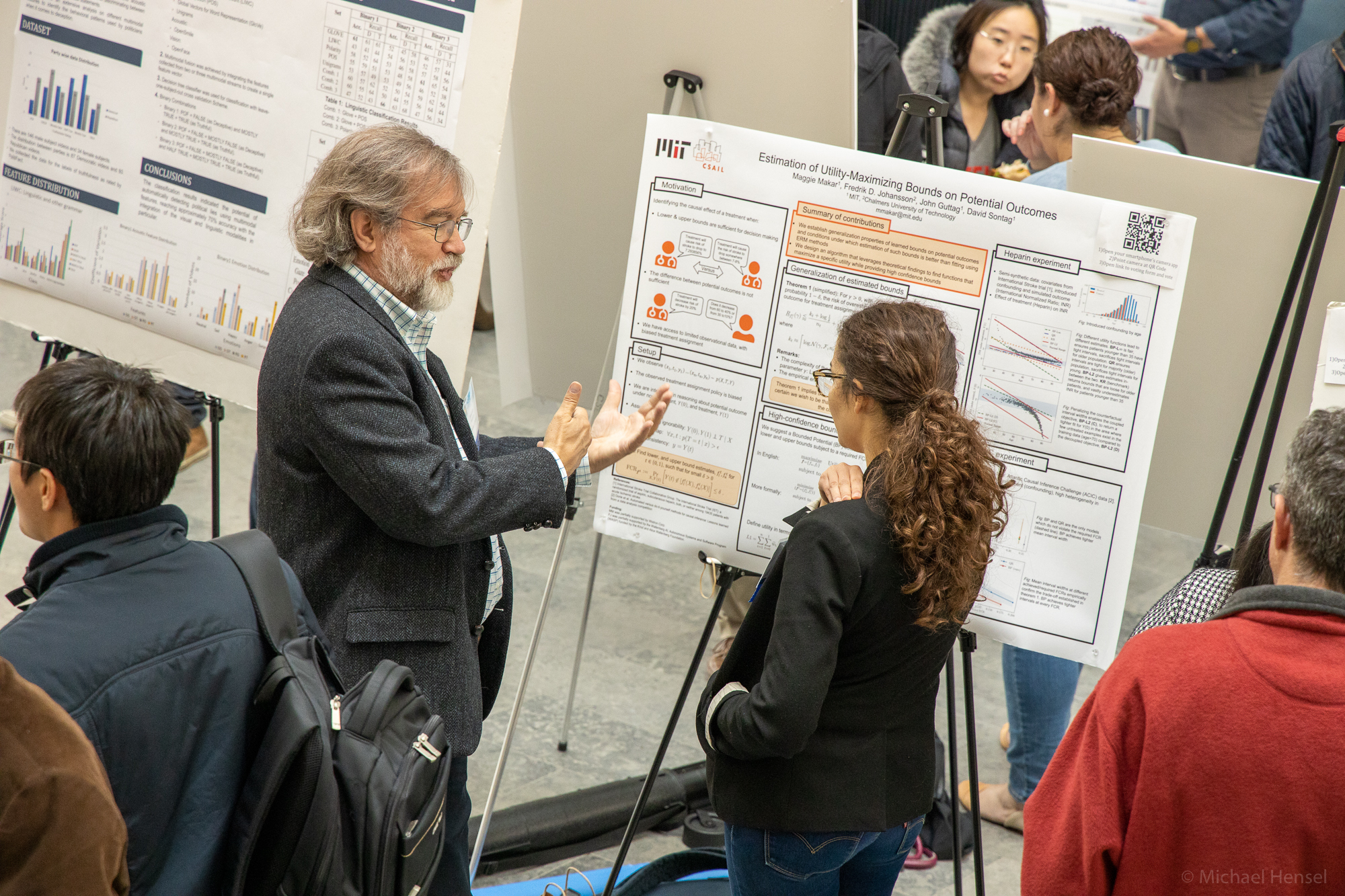

Maggie Makar

Machine Learning

“Data efficient individual treatment effect estimation”

Abstract: The potential for using machine learning algorithms as a tool for suggesting optimal interventions has fueled significant interest in developing methods for estimating individual treatment effects (ITEs) from observational data. However, in many realistic applications, the amount of data available at either training or deployment time is limited, leading to unreliable estimates and faulty decision making. My work focuses on designing causal inference methods that (a) minimize the required data collection at test time and (b) efficiently learn causal effects in finite samples.

While several methods for estimating ITEs have been recently suggested, these methods assume no constraints on the availability of data at the time of deployment or test time. This assumption is unrealistic in settings where data acquisition is a significant part of the analysis pipeline, meaning data about a test case has to be collected in order to predict the ITE. In my work, I have developed Data Efficient Individual Treatment Effect Estimation (DEITEE), a method which exploits the idea that adjusting for confounding, and hence collecting information about confounders, is not necessary at test time. DEITEE achieves significant reductions in the number of variables required at test time with little to no loss in accuracy.

In addition to data scarcity at test time, limited information at training time poses a challenge for accurate ITE estimation. In my work, I exploit the fact that in many real-world applications it may be sufficient for the decision maker to have upper and lower bounds on the potential outcomes of decision alternatives, allowing them to decide on the optimal trade-off between benefit and risk. With this in mind, I developed a machine learning approach for directly learning upper and lower bounds on the potential outcomes under treatment and non-treatment. Theoretical analysis highlights a trade-off between the complexity of the learning task and the confidence with which the resulting bounds cover the true potential outcomes; the more confident we wish to be, the more complex the learning task is. Empirical analysis shows that exploiting this trade-off allows us to get more reliable estimates in finite samples.
