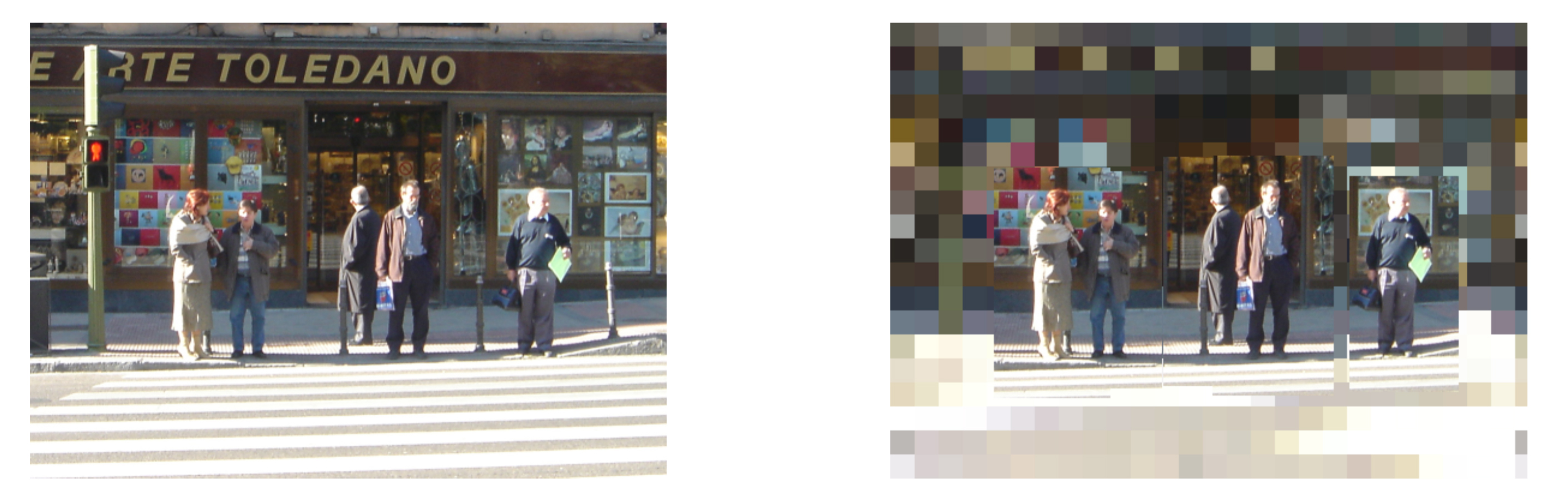

More efficient machine vision technology modeled on human vision

Prof. Robert Dick and advisee Ekdeep Singh Lubana developed a new technique that significantly improves the efficiency of machine vision applications

Enlarge

Enlarge

Prof. Robert Dick and Ekdeep Singh Lubana at the University of Michigan have developed a more efficient technique for machine vision by modeling it on human vision. By simply manipulating a camera’s firmware, the technique cuts energy consumption by 80% and has almost no impact upon accuracy when used for practical vision applications, like license plate recognition and facial recognition. Called “Digital Foveation,” it’s also faster than conventional methods.

“It’ll make new things and things that were infeasible before, practical,” Dick said. “Instead of having to change a battery once a week, for example, it’ll work for five weeks.”

Whether helping inspect and sort products or determining whether the object in front of a driverless car is a pedestrian or a paper bag, machine vision plays a critical role in our increasingly automated life. It has had a tremendous impact on security, healthcare, banking, transportation, and industry, and its applications are expected to have a market value of $15.46 billion by 2022.

It'll make new things and things that were infeasible before, practical.

Prof. Robert Dick

However, today’s machine vision is limited. Due to the power and computational demands of machine vision algorithms, even the simple task of recognizing license plates can be demanding for a computer.

Currently, when computers visually analyze a scene, they use a camera to capture a uniform, high-resolution image, and then they transfer the data over to an application’s processor. The processor runs image classification algorithms, and it costs a lot of energy and time to transfer and process all the data.

Humans, however, gather and process visual information in a far more efficient manner. Human retinas have only a small area supporting high-resolution vision, which is a central, dense sensing region called the “fovea.” By looking around, humans are able to see different parts of their surroundings at different resolutions, capturing the most important areas at high resolution while using low-resolution capture for less-important details. This information is then pieced together to draw inferences about the scene.

Enlarge

Enlarge

Dick’s team modified a computer camera so it would mimic humans by only capturing high-resolution information within a certain bounding box. Those data are sent to an application processor, which uses a machine learning algorithm to assess the information. If it needs more information, it tells the camera where to look next to gather more detail.

While previous researchers have tried to model machine vision on biological organisms, their methods required cumbersome and often unreliable systems. For example, researchers have used variable-resolution camera lenses and gimbals to mechanically turn the camera. Dick and Lubana accomplish the same goal by manipulating the camera’s firmware and the machine learning algorithms, making their technique – called “Digital Foveation” after the fovea that inspired the technique – much easier to use in practice.

Dick and Lubana tested the technique on license plate recognition because it’s straightforward to compare with prior techniques. According to the researchers, the application accounts for 100-billion image captures per year. Future work will expand the technique to other applications, including video.

The research was published as “Digital Foveation: an Energy-Aware Machine Vision Framework” by Dick and Lubana and presented at the 2018 International Conference on Hardware/Software Codesign and System Synthesis. Their follow-up work on capturing only the data needed for machine vision task accuracy is scheduled to appear at the 2019 Data Compression Conference.

MENU

MENU