Yelin Kim wins Best Student Paper Award at ACM Multimedia 2014 for research in facial emotion recognition

She computationally measures, represents, and analyzes human behavior data to illuminate fundamental human behavior and emotion perception, and develop natural human-machine interfaces.

Yelin Kim, a graduate student in Electrical Engineering: Systems, has won the Best Student Paper Award at the 22nd ACM International Conference on Multimedia (ACM MM 2014) for her research in facial emotion recognition. The paper, "Say Cheese vs. Smile: Reducing Speech-Related Variability for Facial Emotion Recognition," was co-authored by her advisor, Prof. Emily Mower Provost.

According to the researchers, applications for emotion recognition systems include social and affective human-machine interaction systems; wellness and health-related systems that help individuals better monitor their emotional landscape; and surveillance systems that can automatically detect potentially dangerous human behaviors and raise an alarm.

The expression of emotion is complex and can be difficult for a computer system to decipher. Emotion can be expressed through facial movement, vocal expression, and even body gestures. However, some of these same changes occur simply through the process of articulation. For example, is someone with a smile on their face happy, or are they simply saying "cheese"?

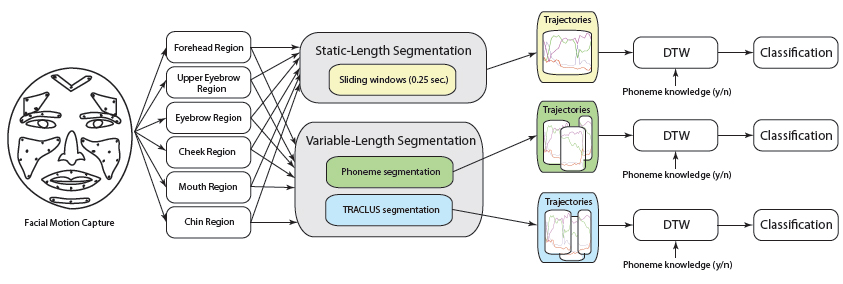

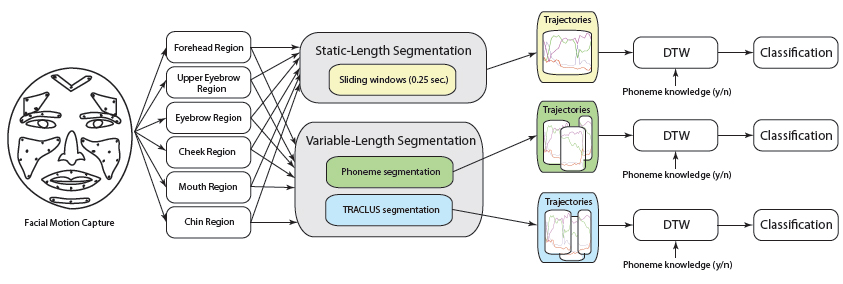

The goal of this research is to account for such speech-related variability when determining the emotion expressed by an individual’s face. The researchers have found that proper temporal segmentation is critical for emotion recognition. However, a key challenge with facial movement segmentation is that it often requires knowledge of what an individual is saying at any given time, and this transcription can be costly to obtain.

The authors describe a new unsupervised segmentation strategy that bridges the gap in accuracy between segmentation based on transcription and traditional window-based segmentation, leading to improved facial emotion recognition.

The researchers would like to thank the EECS community for their contributions to the research. In particular, Dr. Donald Winsor and Laura Fink offered computing resources, and members of the CHAI lab (Computational Human-Centered Analysis and Integration Laboratory), as well as many fellow graduate students, provided helpful comments on the paper.

Yelin Kim is a Ph.D. candidate in the Computational Human-Centered Analysis and Integration Laboratory and has worked with Professor Emily Mower Provost since 2012. She received her B.S. in Electrical and Computer Engineering from Seoul National University, South Korea, in 2011, and her M.S. in Electrical Engineering: Systems from the University of Michigan, Ann Arbor, in 2013. Her research interests are in computational human behavior analysis and affective computing using multimodal (speech and video) signal processing and machine learning techniques. She computationally measures, represents, and analyzes human behavior data (e.g., vocal and facial expressions, and body gestures) to illuminate fundamental human behavior and emotion perception, and to develop natural human-machine interfaces.